User-Guided Image Editing

Diffusion-Based Image Editing with Vision-Language Instructions

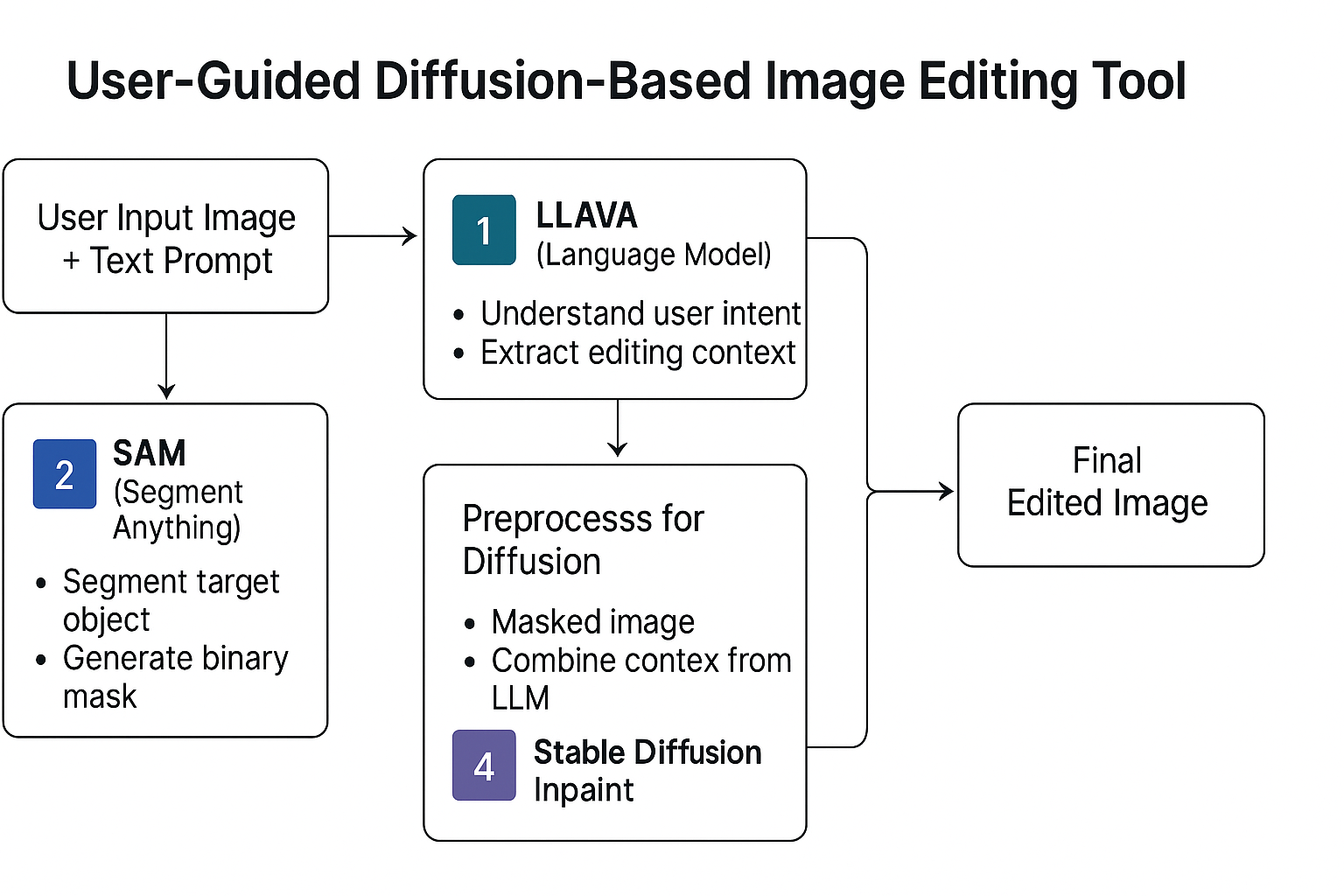

User-Guided Diffusion-Based Image Editing is an image manipulation pipeline that edits images from natural language instructions. It restricts changes to the regions the instruction refers to and keeps the result consistent with the instruction's meaning. The framework integrates three models:

- 🗣️ LLaVA (Language Processor): Interprets the user's instruction and extracts spatial and semantic cues from both the image and the text.

- 🧠 SAM (Segmenter): Identifies and masks the target regions, guided by LLaVA's attention or grounding hints.

- 🎨 Stable Diffusion (Editor): Applies localized edits guided by the prompt and the segmentation mask, producing coherent, high-quality results.
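
LLaVA replies in free-form text, so its cues have to be turned into something the segmenter can consume. The sketch below assumes LLaVA is prompted to answer with a JSON object holding a target name, an edit prompt, and a normalized bounding box as its grounding hint; that schema and the names `EditSpec`, `parse_llava_reply`, and `to_pixel_box` are hypothetical, not part of LLaVA or of this project. The pixel box uses the XYXY layout that SAM accepts as a box prompt.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class EditSpec:
    """Structured edit request (hypothetical schema; LLaVA itself defines none)."""
    target: str                              # object or region to edit, e.g. "car"
    edit: str                                # text prompt for the diffusion editor
    box: tuple[float, float, float, float]   # normalized (x0, y0, x1, y1) in [0, 1]


def parse_llava_reply(reply: str) -> EditSpec:
    """Pull the JSON object out of a free-form reply and validate it."""
    # Greedy match from the first "{" to the last "}", tolerating chatty text around it.
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in reply")
    data = json.loads(match.group(0))  # JSONDecodeError is a subclass of ValueError
    box = tuple(float(v) for v in data["box"])
    if len(box) != 4 or not all(0.0 <= v <= 1.0 for v in box):
        raise ValueError(f"box must be 4 normalized coordinates, got {box}")
    if box[0] >= box[2] or box[1] >= box[3]:
        raise ValueError(f"box corners are out of order: {box}")
    return EditSpec(target=data["target"], edit=data["edit"], box=box)


def to_pixel_box(box: tuple[float, float, float, float], width: int, height: int) -> list[int]:
    """Scale a normalized box to pixel XYXY coordinates for a SAM box prompt."""
    x0, y0, x1, y1 = box
    return [round(x0 * width), round(y0 * height), round(x1 * width), round(y1 * height)]
```

For example, the reply `Sure. {"target": "car", "edit": "a blue car", "box": [0.1, 0.2, 0.5, 0.6]}` on a 640×480 image yields the pixel box `[64, 96, 320, 288]`, which could then be passed as a NumPy array to the `box` argument of SAM's `SamPredictor.predict`.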

The system supports a wide range of edits (e.g., object replacement, color change, background modification) by translating free-form user prompts into targeted modifications. Segmentation supplies the spatial precision, and the diffusion model supplies the generative flexibility.
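
Which pixels the editor may touch depends on the kind of edit. A minimal sketch, assuming binary masks stored as nested lists and an edit type that has already been classified: the labels `object_replacement`, `color_change`, and `background_modification` and all function names are hypothetical. A background edit, for instance, simply inverts the object mask.

```python
Mask = list[list[int]]  # binary mask, 1 = pixel the editor may change, 0 = keep


def invert(mask: Mask) -> Mask:
    """Swap editable and protected pixels."""
    return [[1 - v for v in row] for row in mask]


def dilate(mask: Mask, radius: int = 1) -> Mask:
    """Grow the mask by `radius` pixels using a square neighborhood."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                # Mark every pixel within `radius` of a masked pixel, clipped to the image.
                for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                    for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[yy][xx] = 1
    return out


def editable_region(edit_type: str, object_mask: Mask) -> Mask:
    """Choose which pixels the diffusion editor may modify for a given edit type."""
    if edit_type == "object_replacement":
        # The new object may not match the old outline, so give it some room.
        return dilate(object_mask, radius=1)
    if edit_type == "color_change":
        # Recolor only the object itself.
        return [row[:] for row in object_mask]
    if edit_type == "background_modification":
        # Protect the object and regenerate everything around it.
        return invert(object_mask)
    raise ValueError(f"unknown edit type: {edit_type!r}")
```

The one-pixel dilation radius only keeps the example small; a real radius would likely be tuned to the image resolution.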

📄 Project Paper: Download PDF

The pipeline follows a three-stage editing process:

- Instruction Parsing: LLaVA processes the user's input and identifies the relevant regions and semantics.

- Segmentation Guidance: SAM generates masks for the target objects or regions based on the visual and textual cues extracted in the previous stage.

- Prompt-Guided Editing: A diffusion model regenerates the masked region so that the output is coherent with the rest of the image and matches the instruction.
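
The three stages can be wired together behind small interfaces so that any one model can be swapped without touching the others. The sketch below is illustrative only: the `Protocol` classes, `EditRequest`, and the method names are hypothetical, not the project's actual API, and images are simplified to nested lists of grayscale values. The final compositing step reflects one simple safeguard: latent diffusion decodes the whole image through a lossy VAE, so pasting the original pixels back outside the mask guarantees that only the target region changes.

```python
from dataclasses import dataclass
from typing import Protocol

Image = list[list[float]]  # grayscale stand-in for an RGB image, values in [0, 1]
Mask = list[list[int]]     # 1 = region to edit, 0 = keep


@dataclass
class EditRequest:
    target: str  # what to segment, e.g. "the dog"
    prompt: str  # what to generate there, e.g. "a cat"


class InstructionParser(Protocol):  # stage 1, e.g. a LLaVA wrapper
    def parse(self, image: Image, instruction: str) -> EditRequest: ...


class Segmenter(Protocol):  # stage 2, e.g. a SAM wrapper
    def segment(self, image: Image, request: EditRequest) -> Mask: ...


class Editor(Protocol):  # stage 3, e.g. a Stable Diffusion inpainting wrapper
    def inpaint(self, image: Image, mask: Mask, prompt: str) -> Image: ...


def composite(original: Image, edited: Image, mask: Mask) -> Image:
    """Take edited pixels inside the mask and original pixels everywhere else."""
    return [
        [e if m else o for o, e, m in zip(orow, erow, mrow)]
        for orow, erow, mrow in zip(original, edited, mask)
    ]


def edit_image(
    image: Image,
    instruction: str,
    parser: InstructionParser,
    segmenter: Segmenter,
    editor: Editor,
) -> Image:
    """Run parsing, segmentation, and prompt-guided editing in order."""
    request = parser.parse(image, instruction)
    mask = segmenter.segment(image, request)
    edited = editor.inpaint(image, mask, request.prompt)
    return composite(image, edited, mask)
```

Because the interfaces are structural (`typing.Protocol`), any object with matching methods, including a simple test stub, can stand in for a model.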

This architecture lets non-expert users make high-quality, semantically aligned edits by combining visual grounding, segmentation, and generative modeling in a unified pipeline.

🔖 References

- H. Liu et al., “Visual Instruction Tuning” (LLaVA), arXiv:2304.08485, 2023.

- A. Kirillov et al., “Segment Anything,” arXiv:2304.02643, 2023.

- R. Rombach et al., “High-Resolution Image Synthesis with Latent Diffusion Models,” CVPR, 2022.

- K. Zhang et al., “Text-Guided Image Inpainting with Masked Diffusion,” CVPR, 2023.

- J. Lu et al., “Unified Vision-Language Interface for Image Editing,” arXiv:2310.12345, 2023.