Human Activity Recognition through Wearable Sensors and Video

Multimodal Alignment of Video, Sensor (accelerometer, gyroscope, and orientation), and Language

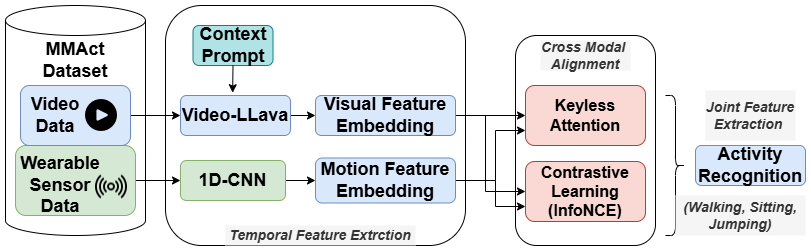

Context-Aware Cross-Modal Alignment for Human Activity Recognition presents a novel multimodal framework for recognizing human activities in complex environments using both visual and wearable sensor data. The system integrates:

- Video-LLaVA: A vision-language model with context prompts for semantic video understanding

- Sensor Features: Fine-grained motion data from accelerometer, gyroscope, and orientation sensors

- Keyless Attention: Efficient cross-modal alignment without key-query structures

- Contrastive Learning: Semantic alignment between modalities using InfoNCE loss

We evaluate on the MMAct dataset (27 activities in crowded scenes), demonstrating superior performance over traditional unimodal and fusion baselines. This project combines the semantic richness of large video-language models with the precision of sensor signals to deliver robust human activity understanding.

📄 Project Paper: Download PDF

Overview of the proposed Human Activity Recognition pipeline using multimodal alignment and contrastive learning.

The framework follows a four-stage process:

- Feature Extraction: Video-LLaVA for contextual video embeddings, and 1D CNNs for sensor signals.

- Cross-Modal Alignment: A keyless attention mechanism computes attention weights over the sensor embeddings, conditioned on the video context.

- Joint Fusion & Classification: The fused representation is passed through a Transformer and an MLP for activity classification.

- Loss Optimization: Cross-entropy loss is combined with a multimodal contrastive loss to improve alignment and generalization.
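The cross-modal alignment stage can be sketched roughly as follows. This is a minimal NumPy illustration under stated assumptions, not the project's implementation: it assumes an additive scoring function (a learned vector applied to a `tanh` projection of each sensor embedding plus the video context), and the names `keyless_attention`, `W_s`, `W_v`, `w`, and all dimensions are hypothetical.

```python
import numpy as np

def keyless_attention(sensor_seq, video_ctx, W_s, W_v, w):
    """Pool sensor embeddings with weights conditioned on a video context vector.

    sensor_seq: (T, d_s) sensor embeddings, one per time step
    video_ctx:  (d_v,)   video-level context embedding
    W_s (d_s, d_h), W_v (d_v, d_h), w (d_h,): learned parameters (hypothetical names)
    """
    # Additive scoring: one scalar per time step, no query-key dot product.
    hidden = np.tanh(sensor_seq @ W_s + video_ctx @ W_v)  # (T, d_h)
    scores = hidden @ w                                    # (T,)
    # Numerically stable softmax over the time axis.
    scores = scores - scores.max()
    weights = np.exp(scores) / np.exp(scores).sum()
    # Attention-weighted sum of the sensor embeddings.
    pooled = weights @ sensor_seq                          # (d_s,)
    return pooled, weights

# Toy example with random inputs (sizes are assumptions for illustration only).
rng = np.random.default_rng(0)
T, d_s, d_v, d_h = 8, 16, 32, 24
sensor_seq = rng.normal(size=(T, d_s))
video_ctx = rng.normal(size=d_v)
W_s = rng.normal(scale=0.1, size=(d_s, d_h))
W_v = rng.normal(scale=0.1, size=(d_v, d_h))
w = rng.normal(scale=0.1, size=d_h)

pooled, weights = keyless_attention(sensor_seq, video_ctx, W_s, W_v, w)
# Simple concatenation stands in for the fusion step before the Transformer and MLP.
fused = np.concatenate([pooled, video_ctx])                # (d_s + d_v,)
```

The attention weights sum to one over the time axis, so `pooled` is a convex combination of the sensor embeddings. Concatenation is only a placeholder for the fusion step; the actual fusion used in the project may differ.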

The training objective combines classification loss and contrastive alignment loss to refine the multimodal representation space.
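One plausible form of this objective is $\mathcal{L} = \mathcal{L}_{\text{CE}} + \lambda\,\mathcal{L}_{\text{InfoNCE}}$. The sketch below illustrates it in NumPy under several assumptions that the text does not confirm: a symmetric (video-to-sensor and sensor-to-video) InfoNCE over in-batch pairs, a temperature of 0.07, and a weighting factor `lam`. All function and parameter names are hypothetical.

```python
import numpy as np

def log_softmax(x, axis=-1):
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def cross_entropy(logits, labels):
    # Mean negative log-likelihood of the true class.
    logp = log_softmax(logits, axis=1)
    return -logp[np.arange(len(labels)), labels].mean()

def info_nce(video_emb, sensor_emb, temperature=0.07):
    # L2-normalize so the dot product is cosine similarity.
    v = video_emb / np.linalg.norm(video_emb, axis=1, keepdims=True)
    s = sensor_emb / np.linalg.norm(sensor_emb, axis=1, keepdims=True)
    sim = (v @ s.T) / temperature                          # (B, B)
    idx = np.arange(len(v))
    # Matching pairs sit on the diagonal; other entries act as negatives.
    loss_v2s = -log_softmax(sim, axis=1)[idx, idx].mean()
    loss_s2v = -log_softmax(sim, axis=0)[idx, idx].mean()
    return 0.5 * (loss_v2s + loss_s2v)

def total_loss(logits, labels, video_emb, sensor_emb, lam=0.5):
    # lam is an assumed weighting between the two terms.
    return cross_entropy(logits, labels) + lam * info_nce(video_emb, sensor_emb)

# Toy batch: 4 samples, 27 activity classes, 4-dimensional embeddings.
logits = np.zeros((4, 27))
labels = np.array([0, 1, 2, 3])
aligned = np.eye(4)                       # each video matches its own sensor embedding
mismatched = np.roll(np.eye(4), 1, axis=0)  # each video matches a different sample

loss_aligned = total_loss(logits, labels, aligned, aligned)
loss_mismatched = total_loss(logits, labels, aligned, mismatched)
```

In this toy batch the contrastive term is close to zero when paired embeddings coincide and grows large when they are permuted, which is the behavior the alignment loss is meant to encourage.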

🔖 References

- B. Lin et al., “Video-LLaVA: Learning United Visual Representation by Alignment Before Projection,” arXiv:2311.10122, 2023.

- Q. Kong et al., “MMAct: A Large-Scale Dataset for Cross Modal Human Action Understanding,” ICCV, 2019.

- X. Chen et al., “Multimodal Sensor Fusion for Human Activity Recognition with Deep Learning,” IEEE Sensors Journal, vol. 20, no. 18, pp. 10894–10903, 2020.

- A. Vaswani et al., “Attention Is All You Need,” NeurIPS, 2017.

- M.-T. Luong et al., “Effective Approaches to Attention-Based Neural Machine Translation,” arXiv:1508.04025, 2015.