FedBalanceTTA

Class Imbalance Mitigation for Federated Test-Time Adaptation

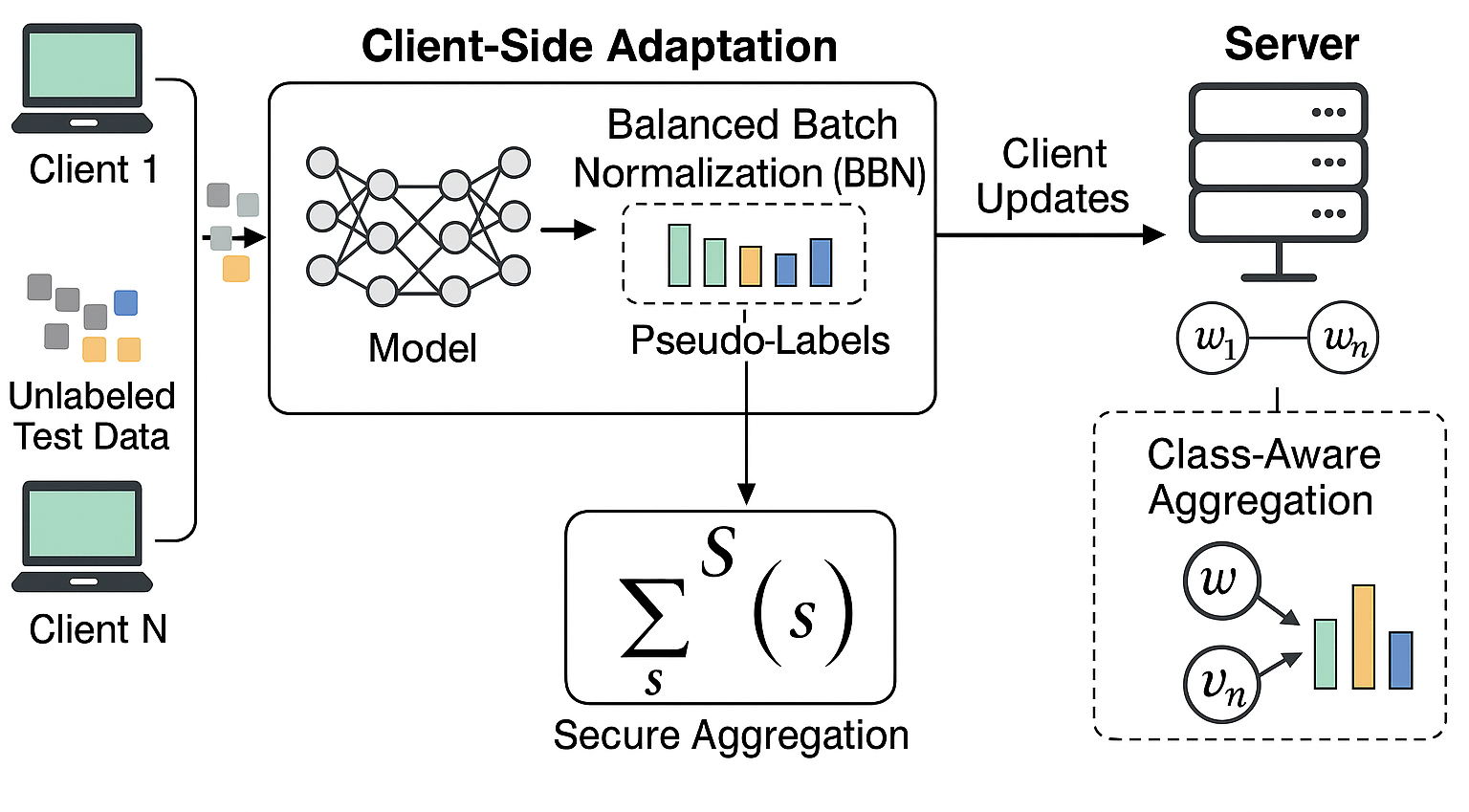

FedBalanceTTA (Federated Learning with Balanced Test-Time Adaptation) adapts models during inference in federated settings, particularly when the unlabeled test data streams exhibit class imbalance and domain shift. The framework supports privacy-preserving client-side adaptation without access to ground-truth labels.

- Balanced Batch Normalization (BBN): Computes class-wise normalization statistics from pseudo-labels to mitigate bias toward dominant classes.

- Unsupervised Test-Time Adaptation: Entropy minimization and confident pseudo-labeling optimize the model during inference.

- Class-Aware Server Aggregation: Clients are weighted according to the skew of their class distributions to preserve minority-class performance during the global update.

- Secure Aggregation: Client updates are privately shared using secure protocols, ensuring privacy of class distributions and raw data.
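The client-side ideas in the list above (confident pseudo-labeling, entropy as the adaptation signal, and class-balanced normalization statistics) can be illustrated with a minimal pure-Python sketch. It is not the project's implementation: the function names, the `threshold=0.9` confidence cutoff, and the exact way per-class statistics are averaged are assumptions made for illustration, and the feature is a single scalar channel rather than a full activation tensor.

```python
import math
from collections import defaultdict

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy of a prediction; the quantity entropy minimization reduces."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def confident_pseudo_labels(batch_logits, threshold=0.9):
    """Return (sample_index, label) pairs whose top probability is >= threshold.
    The threshold value is an assumption, not taken from the paper."""
    selected = []
    for i, logits in enumerate(batch_logits):
        probs = softmax(logits)
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((i, label))
    return selected

def balanced_stats(features, pseudo_labels):
    """Class-balanced mean and variance of one scalar feature channel.
    Each pseudo-class contributes equally, regardless of how many samples it has
    (assumed formulation: average the per-class moments)."""
    by_class = defaultdict(list)
    for x, y in zip(features, pseudo_labels):
        by_class[y].append(x)
    class_means = [sum(v) / len(v) for v in by_class.values()]
    mean = sum(class_means) / len(class_means)
    # Per-class mean squared deviation from the balanced mean, averaged over classes.
    var = sum(
        sum((x - mean) ** 2 for x in v) / len(v) for v in by_class.values()
    ) / len(by_class)
    return mean, var

# A batch dominated by class 0: eight samples at 0.0, two samples of class 1 at 10.0.
features = [0.0] * 8 + [10.0] * 2
labels = [0] * 8 + [1] * 2
plain_mean = sum(features) / len(features)               # pulled toward the dominant class
balanced_mean, balanced_var = balanced_stats(features, labels)
```

In this toy batch the ordinary batch mean sits close to the dominant class, while the balanced mean lies midway between the two class means, which is the effect the BBN bullet describes.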

Extensive experiments on CIFAR-10-C and CIFAR-100-C benchmarks under multiple corruption and imbalance settings demonstrate that FedBBN outperforms state-of-the-art federated and TTA baselines (e.g., TENT, CoTTA, RoTTA).


Overview of the proposed FedBBN pipeline showing client-side balanced adaptation, pseudo-label guided normalization, secure aggregation, and class-aware server updates.

The framework follows a three-stage process:

- Client-Side Adaptation: Pseudo-labels are generated using the current model; BBN layers normalize features with per-class statistics.

- Secure Aggregation: Clients encrypt model updates and send them to the server without exposing sensitive distribution or raw data.

- Class-Aware Global Aggregation: Server aggregates updates using a class-balance-aware weighting to produce a robust, fair global model.
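The secure aggregation stage can be pictured with a toy pairwise-masking scheme, a common textbook construction in which the masks cancel in the sum so the server sees only the aggregate. This README does not say which secure protocol the project uses, so the sketch below is a stand-in technique rather than the project's protocol. `MODULUS`, `SCALE`, and all function names are hypothetical, and a single seeded random generator simulates the pairwise secrets that real clients would derive through key agreement.

```python
import random

MODULUS = 2 ** 32   # arithmetic is done modulo this value (assumed)
SCALE = 10 ** 4     # fixed-point scale for encoding floats as integers (assumed)

def encode(update):
    """Encode a list of floats as fixed-point integers modulo MODULUS."""
    return [round(x * SCALE) % MODULUS for x in update]

def decode(vec):
    """Map integers modulo MODULUS back to signed floats."""
    out = []
    for v in vec:
        if v >= MODULUS // 2:
            v -= MODULUS
        out.append(v / SCALE)
    return out

def pairwise_masks(num_clients, dim, seed=0):
    """Build one mask per client such that all masks sum to zero modulo MODULUS.
    For each pair (i, j), client i adds a shared random vector and client j subtracts it."""
    rng = random.Random(seed)
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks

def mask_update(update, mask):
    """What a client would send: its encoded update plus its mask."""
    return [(e + m) % MODULUS for e, m in zip(encode(update), mask)]

def aggregate(masked_updates):
    """Server side: sum the masked updates; the pairwise masks cancel."""
    dim = len(masked_updates[0])
    total = [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]
    return decode(total)

updates = [[0.5, -1.0], [1.5, 2.0], [-1.0, 0.25]]
masks = pairwise_masks(num_clients=3, dim=2)
masked = [mask_update(u, m) for u, m in zip(updates, masks)]
summed = aggregate(masked)  # equals the element-wise sum of the raw updates
```

Integer arithmetic modulo a fixed value is used so that the masks cancel exactly; with floating-point masks the cancellation would only be approximate.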

Each client adapts in isolation, and only securely aggregated model updates reach the server, where they refine the global model while reducing bias toward dominant classes.
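One possible form of class-balance-aware weighting is sketched below. The README does not give the weighting formula, so this is an assumption: each client is scored by the normalized entropy of its pseudo-label histogram, so clients with more balanced class distributions receive larger aggregation weights. The names `balance_score`, `class_aware_weights`, and `weighted_average`, and the smoothing constant `eps`, are hypothetical.

```python
import math

def balance_score(class_counts):
    """Normalized entropy of a class histogram, in [0, 1].
    1.0 means perfectly uniform; 0.0 means all samples fall in one class."""
    total = sum(class_counts)
    num_classes = len(class_counts)
    if total == 0 or num_classes < 2:
        return 0.0
    ent = -sum((c / total) * math.log(c / total) for c in class_counts if c > 0)
    return ent / math.log(num_classes)

def class_aware_weights(client_counts, eps=1e-8):
    """Aggregation weights proportional to each client's balance score.
    eps keeps the normalization defined even if every client is fully skewed."""
    scores = [balance_score(c) + eps for c in client_counts]
    total = sum(scores)
    return [s / total for s in scores]

def weighted_average(client_updates, weights):
    """Weighted element-wise average of client update vectors."""
    dim = len(client_updates[0])
    return [
        sum(w * u[k] for w, u in zip(weights, client_updates)) for k in range(dim)
    ]

# Client 0 saw a balanced pseudo-label histogram; client 1 saw a single class.
client_counts = [[50, 50], [100, 0]]
weights = class_aware_weights(client_counts)
global_update = weighted_average([[1.0, 0.0], [0.0, 1.0]], weights)
```

Other choices are equally plausible, for example upweighting clients that hold globally rare classes. The sketch is meant only to show where a skew-dependent weight enters the aggregation step.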

🔖 References

- Md. Akil Raihan Iftee et al., “FedBalanceTTA – Federated Learning with Balanced Test Time Adaptation,” ICONIP 2025 (Accepted).

- Wang et al., “Tent: Fully Test-Time Adaptation by Entropy Minimization,” ICLR, 2021.

- Wang et al., “Continual Test-Time Domain Adaptation,” CVPR, 2022.

- Yuan et al., “Robust Test-Time Adaptation in Dynamic Scenarios,” CVPR, 2023.

- Shao et al., “Federated Face Anti-Spoofing with Test-Time Adaptation,” FG, 2021.